LangChain: A Revolution in Conversational AI

The world of chatbots and Large Language Models (LLMs) has recently undergone a spectacular evolution. ChatGPT, developed by OpenAI, is one of the most notable examples: it reached over one million users in just five days. This rapid rise underlines the growing interest in conversational AI and the unprecedented possibilities that LLMs offer.

LLMs and ChatGPT: A Short Introduction

Large Language Models (LLMs) and chatbots have become central concepts in artificial intelligence. LLMs are powerful AI models that understand and generate natural language, while chatbots are programs that simulate human conversation and perform tasks based on textual input. Together they are reshaping how humans interact with computers. ChatGPT, one of the best-known chatbots, gained immense popularity in a short period of time.

LangChain: The Bridge to LLM-Based Applications

LangChain is one of the frameworks that enable developers to leverage the power of LLMs when building applications. This open-source library, initiated by Harrison Chase, offers a generic interface to different LLMs and lets developers extend them with new data and functionality. Currently available in Python and TypeScript/JavaScript, LangChain is designed to make it easy to connect LLMs to different data sources.

LangChain Core Concepts

To fully understand LangChain, we need to explore some core concepts:

- Chains: LangChain is built on the concept of a chain. A chain is simply a generic sequence of modular components. These chains can be put together for specific use cases by selecting the right components.

- LLMChain: The most common type of chain within LangChain is the LLMChain. This consists of a PromptTemplate, a Model (which can be an LLM or a chat model) and an optional OutputParser.

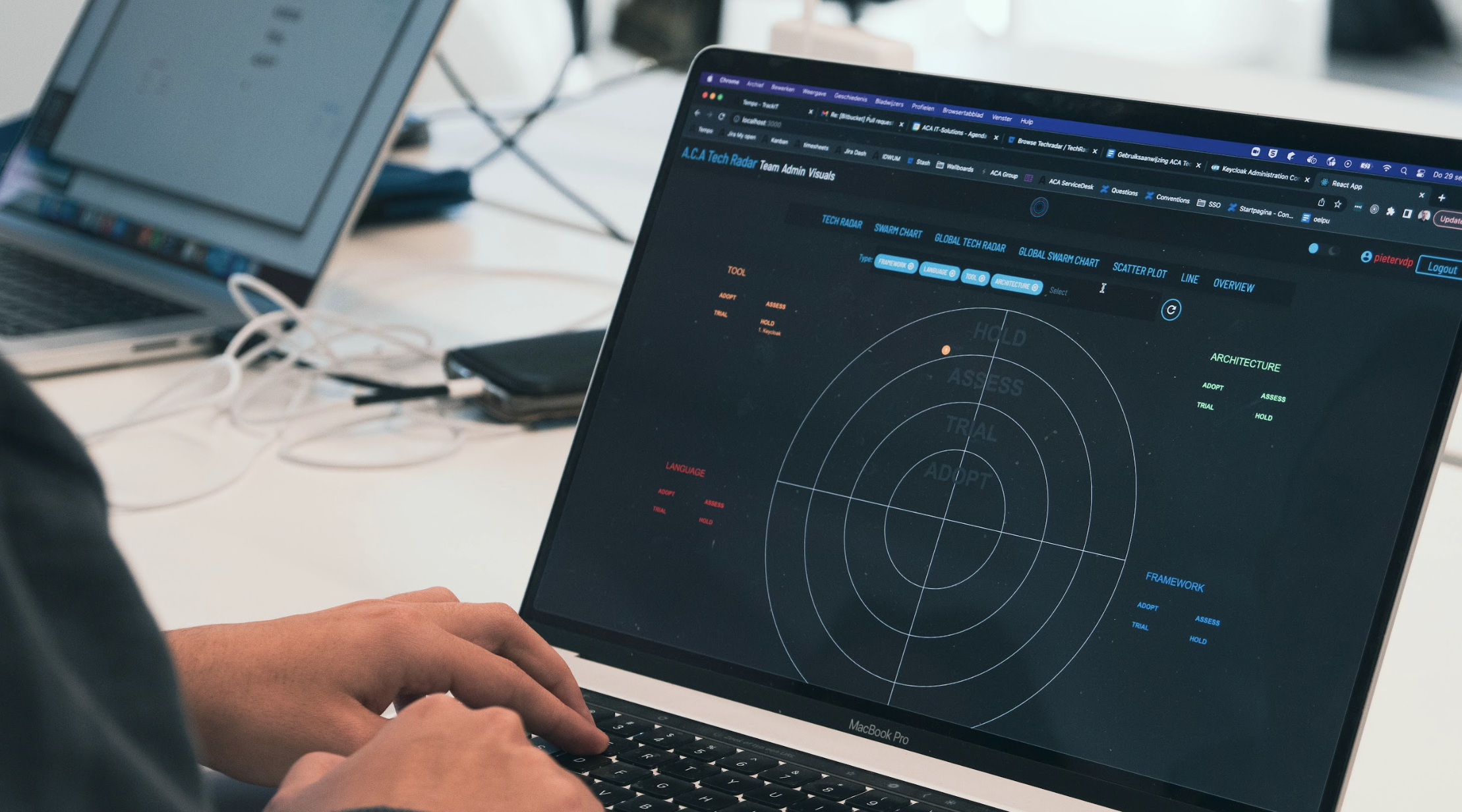

A PromptTemplate is a template used to generate a prompt for the LLM. It contains placeholders, such as a topic, that the user fills in; the completed prompt is then sent as input to the model.

LangChain also offers ready-made PromptTemplates, such as zero-shot, one-shot and few-shot prompts.

- Model and OutputParser: A model is the implementation of the LLM itself. LangChain has several model implementations, including OpenAI, GPT4All and Hugging Face.

It is also possible to add an OutputParser to process the model's output. For example, a ListOutputParser can convert the model's text output into a native list in the host programming language, such as a Python list.
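The template, model and parser fit together as a simple pipeline. The sketch below illustrates that flow in plain Python rather than with LangChain's own classes; `SimplePromptTemplate`, `parse_comma_separated_list`, `run_chain` and `fake_model` are hypothetical names, and the fake model returns a fixed string so the example runs without an API key.

```python
from string import Formatter
from typing import Callable, List


class SimplePromptTemplate:
    """Hypothetical prompt template: a format string with named placeholders."""

    def __init__(self, template: str) -> None:
        self.template = template
        # Collect placeholder names, e.g. "topic" from "{topic}".
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name}
        )

    def format(self, **kwargs: str) -> str:
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing prompt variables: {sorted(missing)}")
        return self.template.format(**kwargs)


def parse_comma_separated_list(text: str) -> List[str]:
    """Output parser: turn model output like 'a, b, c' into a Python list."""
    return [item.strip() for item in text.split(",") if item.strip()]


def run_chain(template: SimplePromptTemplate,
              model: Callable[[str], str],
              parser: Callable[[str], List[str]],
              **inputs: str) -> List[str]:
    """Fill the template, call the model, then parse its raw text output."""
    prompt = template.format(**inputs)
    raw_output = model(prompt)
    return parser(raw_output)


def fake_model(prompt: str) -> str:
    """Hypothetical model stub that ignores the prompt and returns fixed text."""
    return "Chains, Agents, Retrievers"


template = SimplePromptTemplate(
    "List three concepts related to {topic}, separated by commas."
)
print(run_chain(template, fake_model, parse_comma_separated_list, topic="LangChain"))
# ['Chains', 'Agents', 'Retrievers']
```

Swapping `fake_model` for a function that calls a real LLM would leave the rest of the pipeline unchanged, which is the modularity that chains are built on.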

Data Connectivity in LangChain

To give the LLM Chain access to specific data, such as internal data or customer information, LangChain uses several concepts:

- Document Loaders

Document Loaders allow LangChain to retrieve data from various sources, such as CSV files and URLs.

- Text Splitter

A text splitter divides documents into smaller chunks that are easier for LLMs to process, taking limitations such as the maximum number of tokens into account.

- Embeddings

Embeddings convert text into numerical vectors, which makes pieces of text easier to compare and process. LangChain offers several embedding integrations; OpenAI Embeddings is a popular example.

- VectorStores

A vector store holds the embedded text: each vector represents a fragment of text, and the store can search for the vectors most similar to a query. FAISS (from Meta) and ChromaDB are popular examples.

- Retrievers

Retrievers connect the LLM to the data in a VectorStore. They retrieve relevant data and add it to the prompt as context, enabling context-aware questions and tasks.
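To illustrate why embeddings make text easier to compare, here is a toy sketch. It assumes a simple bag-of-words vector in place of a real embedding model such as OpenAI Embeddings, and uses cosine similarity, a common measure for comparing embeddings; `embed` and `cosine_similarity` are hypothetical helpers, not LangChain functions.

```python
import math
import re
from collections import Counter
from typing import Dict


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: map each lowercase word to how often it occurs.

    Real embedding models return dense vectors that capture meaning;
    word counts only capture shared vocabulary.
    """
    words = re.findall(r"[a-z]+", text.lower())
    return {word: float(count) for word, count in Counter(words).items()}


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors (1.0 = same direction, 0.0 = no shared words)."""
    dot = sum(value * b.get(word, 0.0) for word, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)


query = embed("How do I split documents?")
about_splitting = embed("A text splitter splits documents into chunks.")
about_agents = embed("Agents choose which tool to call.")

print(round(cosine_similarity(query, about_splitting), 3))  # 0.169
print(cosine_similarity(query, about_agents))               # 0.0
```

A real embedding model would also rate "split" and "splitter" as closely related, which plain word counts cannot do.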

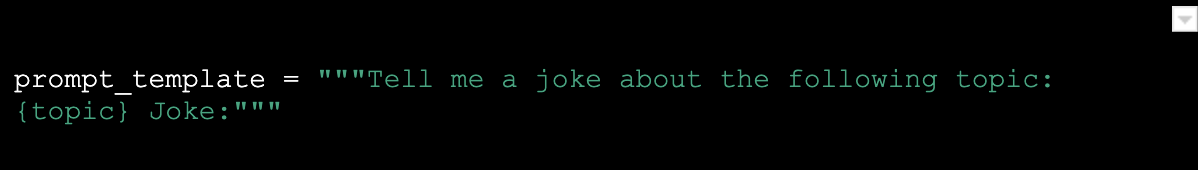

Such a context-aware prompt combines the retrieved fragments with the user's question and instructs the model to answer on the basis of that context.
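A minimal sketch of how a context-aware prompt can be assembled, assuming the retrieved fragments are already available as strings. The template wording and the `build_context_prompt` helper are hypothetical, chosen for illustration.

```python
from typing import List

# Hypothetical template wording, assumed for illustration.
CONTEXT_PROMPT = (
    "Use the following pieces of context to answer the question at the end.\n"
    "If the context does not contain the answer, say that you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_context_prompt(fragments: List[str], question: str) -> str:
    """Insert the retrieved fragments, separated by blank lines, and the question."""
    context = "\n\n".join(fragment.strip() for fragment in fragments)
    return CONTEXT_PROMPT.format(context=context, question=question)


print(build_context_prompt(
    ["LangChain is available in Python and TypeScript/JavaScript.",
     "LangChain was initiated by Harrison Chase."],
    "Which programming languages does LangChain support?",
))
```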

Demo Application

To illustrate the power of LangChain, we can create a demo application that follows these steps:

- Retrieve data based on a URL.

- Split the data into manageable blocks.

- Store the data in a vector database.

- Grant an LLM access to the vector database.

- Create a Streamlit application that gives users access to the LLM.

Below we show how to perform these steps in code:

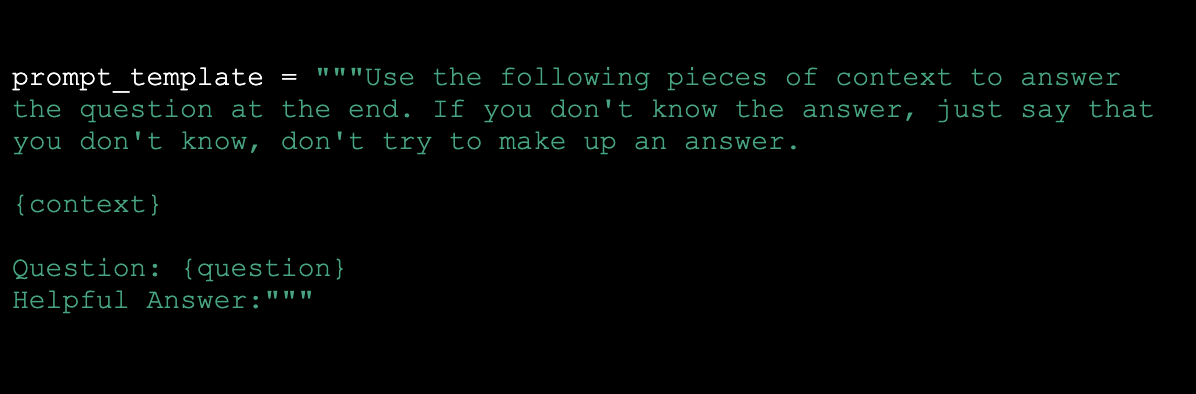

1. Retrieve Data

Fortunately, retrieving data from a website with LangChain does not require any manual work. Here's how we do it:

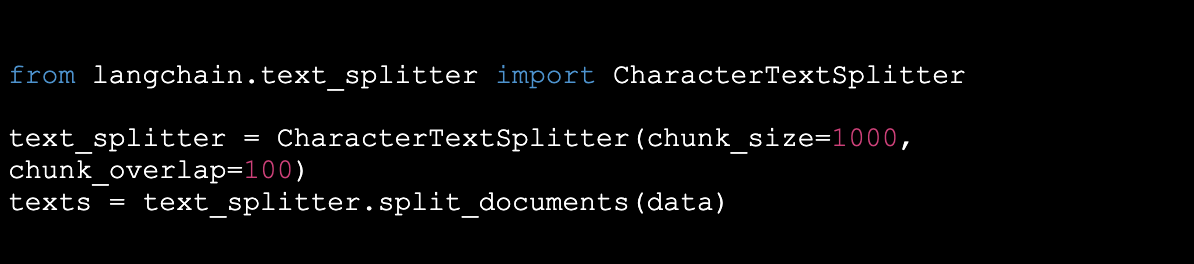
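To give a rough idea of what a web document loader does behind the scenes, here is a standard-library sketch that fetches a page and keeps only its visible text. The `Document` class only loosely mirrors LangChain's document objects (`page_content` plus `metadata`), and `load_url` and `html_to_document` are hypothetical helpers; a real loader handles many more cases, such as collecting multiple pages from a site.

```python
from dataclasses import dataclass, field
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple
from urllib.request import urlopen


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document: text plus metadata."""
    page_content: str
    metadata: Dict[str, str] = field(default_factory=dict)


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag: str) -> None:
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data: str) -> None:
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_document(html: str, source: str) -> Document:
    """Strip the markup and keep the page's source URL as metadata."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return Document(page_content=" ".join(parser.parts), metadata={"source": source})


def load_url(url: str) -> Document:
    """Fetch one page over HTTP and convert it to a Document (needs network access)."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return html_to_document(html, source=url)


doc = html_to_document(
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>LangChain</h1><p>A framework for LLM apps.</p></body></html>",
    source="https://example.com",
)
print(doc.page_content)  # LangChain A framework for LLM apps.
```

The example calls `html_to_document` on an inline HTML string so that it runs offline; `load_url` is only needed when fetching a live page.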

2. Split Data

The data loaded above now consists of a collection of pages from the website. These pages can contain more text than an LLM can handle at once, because many LLMs accept only a limited number of tokens. Therefore, we split the documents into smaller chunks:

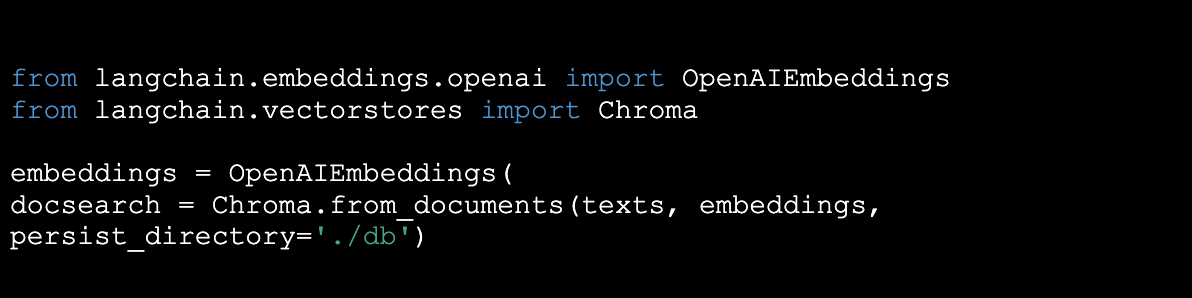
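A minimal sketch of splitting with overlap, assuming plain fixed-size character windows. LangChain's splitters, such as `RecursiveCharacterTextSplitter`, take similarly named `chunk_size` and `chunk_overlap` parameters but prefer to cut at natural boundaries such as paragraph breaks; the `split_text` function below is a hypothetical simplification.

```python
from typing import List


def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters, so text near a
    boundary keeps some of its surrounding context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new chunk moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

With realistic values (for example 1,000 characters with an overlap of 200), each chunk fits comfortably within a model's context while neighboring chunks still share some text.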

3. Store Data

Now that the data has been broken down into smaller contextual fragments, we store them in a vector database so the LLM can access them efficiently. In this example we use Chroma:

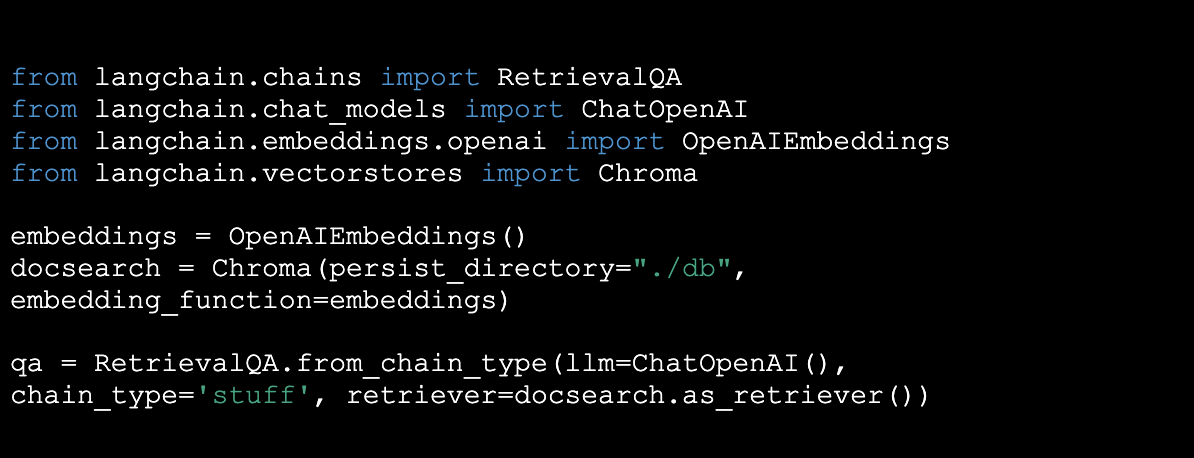
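To show what a vector store does with these fragments, here is a hypothetical in-memory version. It is not Chroma: it uses a toy bag-of-words embedding and a linear scan with cosine similarity, whereas real vector databases use learned embeddings and indexes built for fast search. The method names `add_texts` and `similarity_search` loosely follow LangChain's vector store interface.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy bag-of-words embedding, a stand-in for a real embedding model."""
    words = re.findall(r"[a-z]+", text.lower())
    return {word: float(count) for word, count in Counter(words).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal vector store: keeps (text, vector) pairs in a list."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Dict[str, float]]] = []

    def add_texts(self, texts: List[str]) -> None:
        # Embed each fragment once, at insertion time.
        for text in texts:
            self._items.append((text, embed(text)))

    def similarity_search(self, query: str, k: int = 2) -> List[str]:
        """Return the k stored texts whose vectors are most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(query_vector, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = InMemoryVectorStore()
store.add_texts([
    "Chroma is an open-source vector database.",
    "Streamlit turns Python scripts into web apps.",
    "FAISS performs fast similarity search over vectors.",
])
print(store.similarity_search("Which vector database is open-source?", k=1))
# ['Chroma is an open-source vector database.']
```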

4. Grant Access

Now that the data is saved, we can build a chain in LangChain. Here the chain combines a retrieval step with an LLM call to achieve the desired outcome. For this example we use the existing RetrievalQA chain that LangChain offers: it retrieves relevant fragments from the newly built database, passes them together with the question to an LLM and returns the answer:

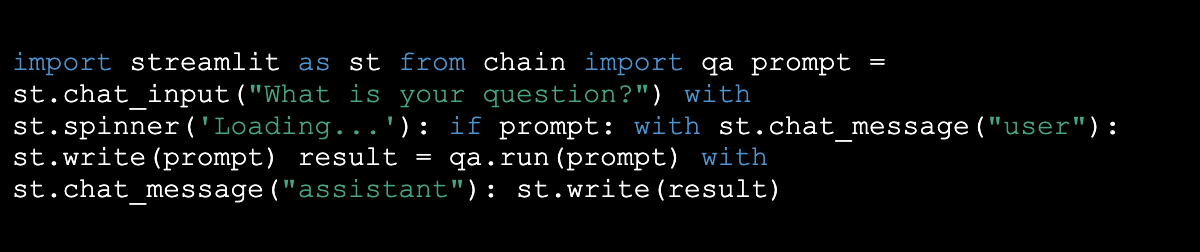
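The idea behind this chain can be sketched in a few lines, assuming a retriever and an LLM are available as plain functions. Placing all retrieved fragments into a single prompt corresponds roughly to the "stuff" strategy; `retrieval_qa`, `fake_retriever` and `fake_llm` are hypothetical stand-ins, not the RetrievalQA implementation.

```python
from typing import Callable, List


def retrieval_qa(question: str,
                 retrieve: Callable[[str], List[str]],
                 llm: Callable[[str], str]) -> str:
    """Retrieve fragments for the question, put them all in one prompt, ask the LLM."""
    fragments = retrieve(question)
    context = "\n\n".join(fragments)
    prompt = (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return llm(prompt)


def fake_retriever(question: str) -> List[str]:
    """Hypothetical retriever that always returns the same fragment."""
    return ["LangChain was initiated by Harrison Chase."]


def fake_llm(prompt: str) -> str:
    """Hypothetical LLM stub: 'knows' the answer only if the prompt contains it."""
    return "Harrison Chase" if "Harrison Chase" in prompt else "I don't know."


print(retrieval_qa("Who initiated LangChain?", fake_retriever, fake_llm))  # Harrison Chase
print(retrieval_qa("Who initiated LangChain?", lambda q: [], fake_llm))    # I don't know.
```

The second call shows the point of retrieval: without the retrieved context, the stub LLM cannot answer.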

5. Create Streamlit Application

Now that we've given the LLM access to the data, we need to provide a way for users to query it. For this we use Streamlit, an open-source Python framework for building simple web applications.

Agents and Tools

In addition to the standard chains, LangChain also offers Agents for more advanced applications. Agents have access to various tools, each of which performs a specific function. These tools range from a "Google Search" tool to Wolfram Alpha, a tool for solving complex mathematical problems. An agent uses the LLM to decide which tool to call to answer a question, which enables more advanced reasoning.
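A simplified sketch of tool selection follows. In a LangChain agent the LLM itself decides which tool to use, often over several reasoning steps; here a hypothetical rule-based `choose_tool` function stands in for that decision, and `calculator` and `search` are toy tools rather than real Google Search or Wolfram Alpha integrations.

```python
import operator
import re
from typing import Callable, Dict, Tuple

# Toy calculator tool: evaluates "a op b" with +, -, * or /.
OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    left, symbol, right = expression.split()
    return str(OPERATORS[symbol](float(left), float(right)))


def search(query: str) -> str:
    """Hypothetical search tool; a real one would call a search API."""
    return f"(search results for {query!r})"


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "search": search}

ARITHMETIC = re.compile(r"what is (\d+(?:\.\d+)?) ([-+*/]) (\d+(?:\.\d+)?)\??")


def choose_tool(question: str) -> Tuple[str, str]:
    """Stand-in for the LLM's decision: return (tool name, tool input)."""
    match = ARITHMETIC.fullmatch(question.strip().lower())
    if match:
        return "calculator", " ".join(match.groups())
    return "search", question


def run_agent(question: str) -> str:
    """Single step: pick a tool, run it, and report which tool produced the answer."""
    tool_name, tool_input = choose_tool(question)
    observation = TOOLS[tool_name](tool_input)
    return f"[{tool_name}] {observation}"


print(run_agent("What is 6 * 7?"))          # [calculator] 42.0
print(run_agent("Who created LangChain?"))  # [search] (search results for 'Who created LangChain?')
```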

Alternatives for LangChain

Although LangChain is a powerful framework for building LLM-driven applications, there are other alternatives available. For example, a popular tool is LlamaIndex (formerly known as GPT Index), which focuses on connecting LLMs with external data. LangChain, on the other hand, offers a more complete framework for building applications with LLMs, including tools and plugins.

Conclusion

LangChain is an exciting framework that opens the doors to a new world of conversational AI and application development with Large Language Models. With the ability to connect LLMs to various data sources and the flexibility to build complex applications, LangChain promises to become an essential tool for developers and businesses looking to take advantage of the power of LLMs. The future of conversational AI is looking bright, and LangChain plays a crucial role in this evolution.
