Automated testing: make business & IT love each other

Automated testing, when done right, eliminates the increased risk and additional problems that manual testing introduces. In this blog post, we disprove the misplaced belief that manual testing and evidence collection add a lot of value.

At ACA, we embrace an agile methodology. As such, we work closely with our customers to deliver solutions that solve their actual business problems, and we strive to solve them fast. This approach has proven its value time and again, because it gathers as much feedback as early as possible.

Some customers have historically enforced thorough manual user acceptance testing right before a software change can go live. The reason is obvious: their business greatly depends on this software, so any risk and financial impact resulting from it should be ruled out. So manual testing after finishing all development makes perfect sense, right?

Or not? Counterintuitively, our team has often witnessed that manual testing indirectly causes more risk and problems, not less! We would even go so far as to say it’s like a virus. A virus that infects every part of the organisation, often completely unnoticed! How is this possible? Because manual testing isn’t just the final check it was meant to be. It’s a process. A process that steers the way you do change management, the way you plan resources and the way you assign and shift accountability.

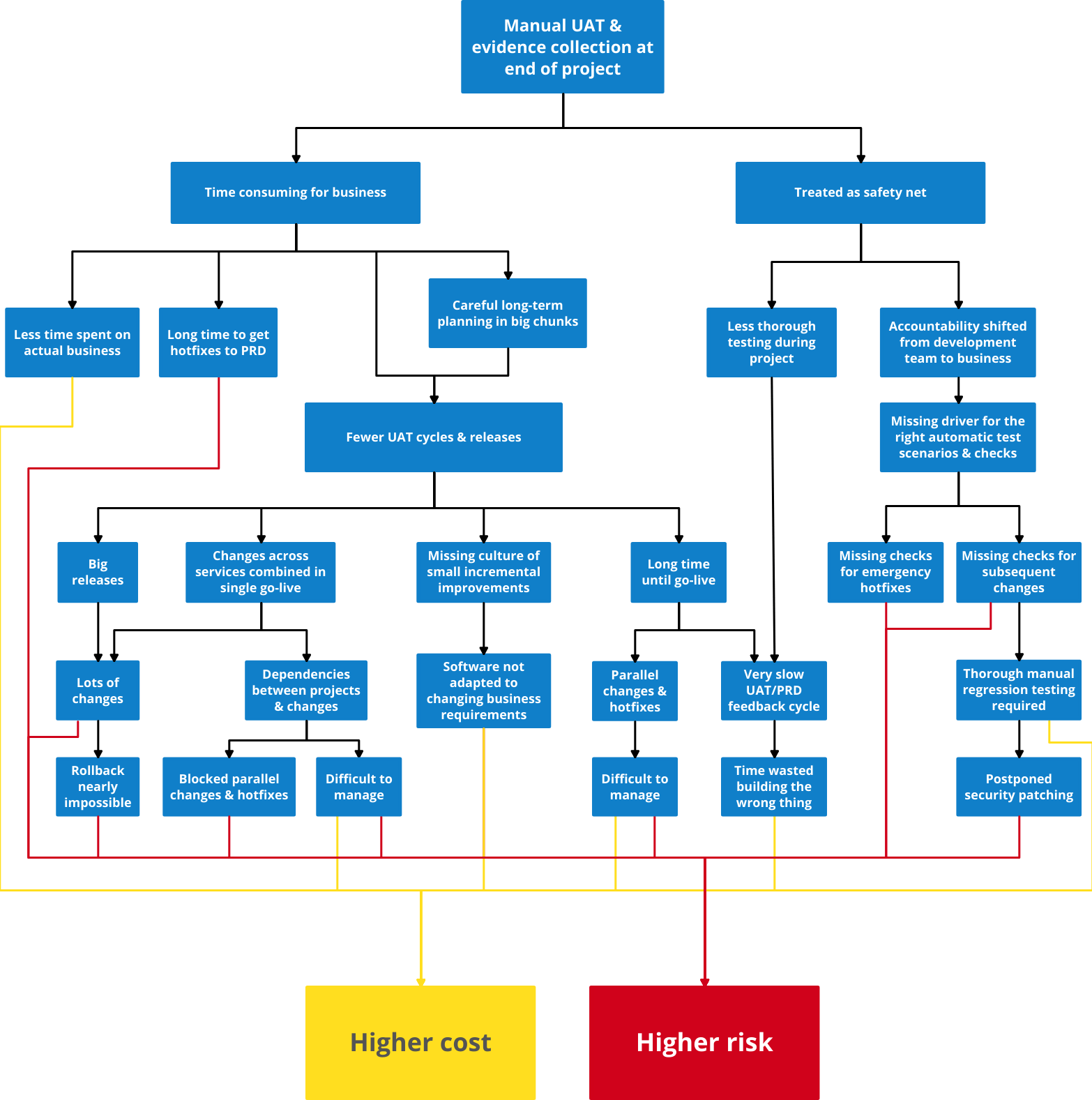

Manual testing: higher costs and risks

Perfectly clear, right?

Maybe not. Let me summarize:

- Because manual testing is time-consuming, it leads to too few, and therefore too large, releases. This causes:

  - Risky go-lives

  - Slow feedback

  - Tricky dependencies, complicating hotfixes

- Due to the additional safety net, accountability shifts away from the development team. This inevitably leads to missing automated test scenarios and checks, causing:

  - Insufficient confidence for future go-lives

  - Mandatory (or missing) manual regression testing for future changes

  - Bugs (over time)

All of this costs real money and introduces real risks!

Putting automated tests in the driver's seat

Instead of tackling each issue at the leaves, we took a relatively small action to tackle the problem at its root: eliminating the misplaced belief that this type of manual testing and manual evidence collection adds a lot of value.

We already had a large number of automated tests as part of our test-driven development lifecycle. So how could we start leveraging them? First, we explained our current testing habits to the business users. Then, for a concrete project, we defined test scenarios together with them. Finally, we gave them continuous insight into the results.

Step 1: Teach the business about automated testing

Which kinds of automated tests are already in place? Which guarantees do they provide? And which guarantees do they not provide?

Business users focus on their business. They don’t know application architecture, let alone what an automated test actually is. You can’t see (most) automated tests, so how could you ever trust them? Reducing reliance on manual testing is therefore not just about changing some practices and adding some checks. At its core, it’s about providing insight and earning trust.

More specifically, we explained the following:

- We work test-driven: not a single feature is implemented before a test is in place. This test must also fail before the feature is implemented, to make sure it actually tests the right thing.

- It is impossible to deliver a change unless all tests pass (what we developers call a successful ‘build’). This includes not only the new tests for a new story, but also the tests of all past stories.

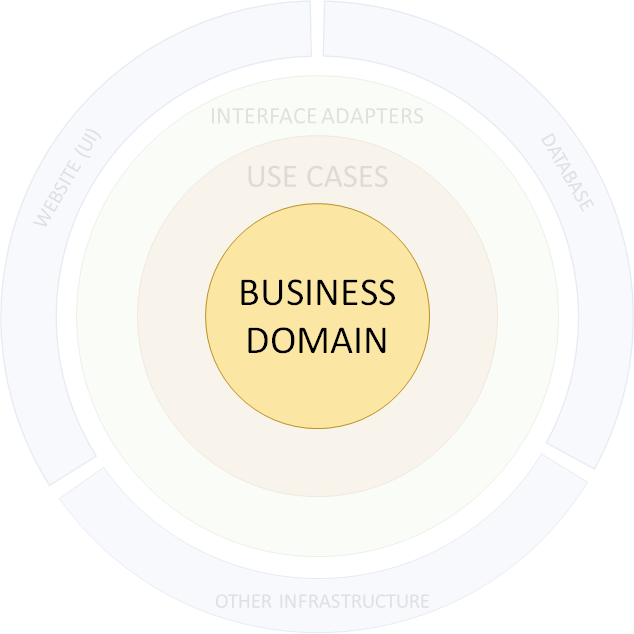

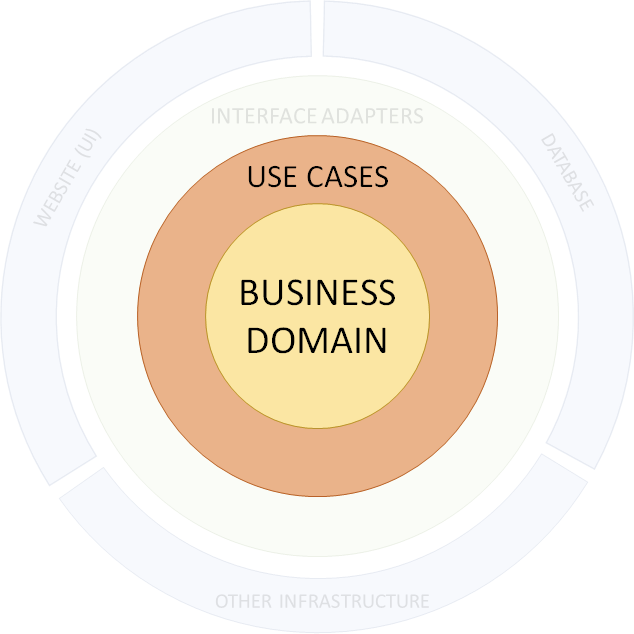

- Our applications are layered and each layer provides its own guarantees. For the technical reader: we use a variant of hexagonal architecture, whose decoupling from technology proved to align perfectly with providing business guarantees.

Our business domain layer is completely unaware of any technology or infrastructure, so those things can’t possibly break the guarantees given by the tests at this layer.

Our use case layer relies on infrastructure only in high-level business terms, e.g. on something that can ‘save’ or ‘find’ things. The tests guarantee correct results for the actions and queries in this layer, given correct infrastructure.

Concrete technology/infrastructure choices are plugins at the borders of our applications, in the same way that a printer is plugged into a computer: Excel does not know the model of your printer, it just knows that it can print. If the plugins (‘printer’) work, the use cases (actions and queries) will also work.
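The layering described above can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not a complete hexagonal architecture: the submission/approval domain and all class names are hypothetical, `SubmissionRepository` is the port the use case depends on in business terms (‘save’ and ‘find’), and the two repositories are interchangeable plugins, one in memory and one built on the standard-library `sqlite3` module.

```python
from __future__ import annotations

import sqlite3
from dataclasses import dataclass
from typing import Protocol


# Domain layer: pure business rules, no knowledge of any technology.
@dataclass
class Submission:
    id: str
    amount: float
    approved: bool = False

    def approve(self) -> None:
        # Hypothetical business rule, for illustration only.
        if self.amount <= 0:
            raise ValueError("only submissions with a positive amount can be approved")
        self.approved = True


# Port: what the use case needs from infrastructure, in business terms only.
class SubmissionRepository(Protocol):
    def save(self, submission: Submission) -> None: ...

    def find(self, submission_id: str) -> Submission | None: ...


# Use case layer: works through the port, never through a concrete technology.
class ApproveSubmission:
    def __init__(self, repository: SubmissionRepository) -> None:
        self._repository = repository

    def execute(self, submission_id: str) -> Submission:
        submission = self._repository.find(submission_id)
        if submission is None:
            raise LookupError(f"unknown submission {submission_id}")
        submission.approve()
        self._repository.save(submission)
        return submission


# Plugins at the border: interchangeable implementations of the same port.
class InMemorySubmissionRepository:
    def __init__(self) -> None:
        self._items: dict[str, Submission] = {}

    def save(self, submission: Submission) -> None:
        self._items[submission.id] = submission

    def find(self, submission_id: str) -> Submission | None:
        return self._items.get(submission_id)


class SqliteSubmissionRepository:
    def __init__(self, connection: sqlite3.Connection) -> None:
        self._db = connection
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS submission "
            "(id TEXT PRIMARY KEY, amount REAL NOT NULL, approved INTEGER NOT NULL)"
        )

    def save(self, submission: Submission) -> None:
        self._db.execute(
            "INSERT OR REPLACE INTO submission (id, amount, approved) VALUES (?, ?, ?)",
            (submission.id, submission.amount, int(submission.approved)),
        )

    def find(self, submission_id: str) -> Submission | None:
        row = self._db.execute(
            "SELECT id, amount, approved FROM submission WHERE id = ?", (submission_id,)
        ).fetchone()
        return None if row is None else Submission(row[0], row[1], bool(row[2]))
```

Because `ApproveSubmission` depends only on the `SubmissionRepository` protocol, a test that runs it with the in-memory plugin shows that the use case is correct. Whether the SQLite plugin works is a separate and much smaller question, just as a working printer driver is separate from Excel.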

We also test all layers together using click-through UI tests. Because they can be shown visually and cover all parts in integration, these tests give the business a lot of confidence.

There are some gaps in our automated testing strategy. Some integrations with infrastructure or external services can’t be verified easily in an automated way. This is where manual testing needs to be focused.

Business users should not waste their time checking whether a certain message appears when you click a certain button.

The purpose is not to turn business users into developers, but to show them why developers trust these tests as a matter of course. So instead of overwhelming them with technical details, ask what they are concerned about and answer patiently. They won’t remember all the details, nor should they. But the trust will stick. And only once that trust is earned will they accept a change in testing habits. In fact, we found them asking for it themselves!

Step 2: Determine test scenarios together

Which scenarios are business-critical? Which checks are necessary to provide sufficient confidence to go live? These are questions we now address during story elaboration. Consequently, the resulting automated tests have now become a deliverable of each story.

Manual tests often focus on long, end-to-end business processes. Even if each part of the process was proven to work perfectly in isolation and you were the person to flip the switch in production: would you feel 100% confident? For those brave enough to answer yes: it’s either because you know everything in detail, or you don’t know enough!

We therefore focused on the same end-to-end business flows, simulated by clicking through the integrated application (excluding only a few minor external services).

Step 3: Provide continuous insight

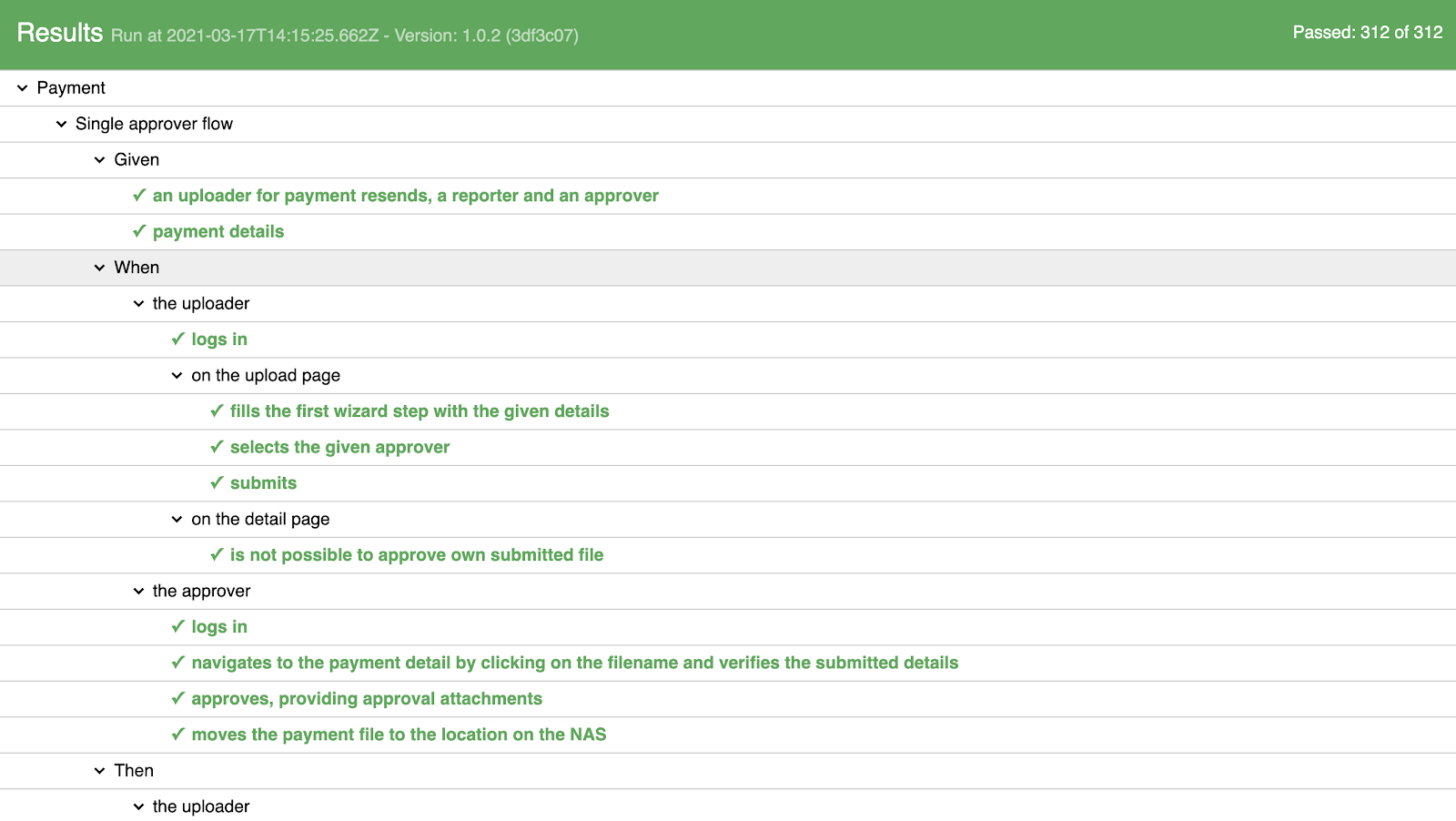

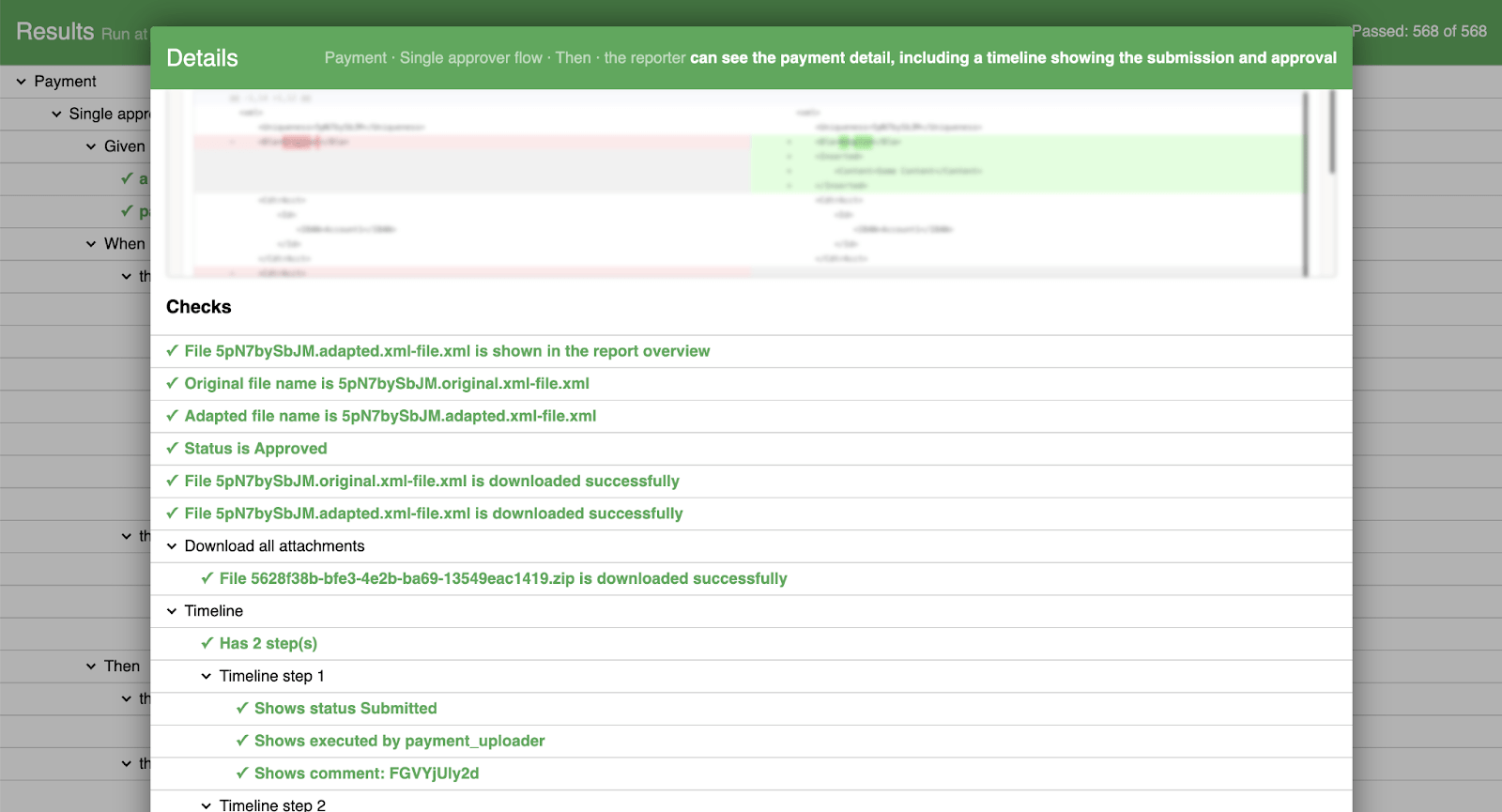

The right automated test scenarios were now decided upon, but how could the business users know that these had been implemented correctly? And how could we provide them with the evidence they were required to document? To answer both questions, as a last step, we introduced a detailed test report showing all executed steps in Given-When-Then form.

Clicking on a step opens a popup with screenshots and a log of all executed checks. This provides our business users with evidence and confidence that the correct things have been checked.
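The idea behind such a report can be approximated with a small step recorder. The sketch below is a hypothetical Python illustration, not the reporting tool behind our actual report: each Given/When/Then step collects the checks executed during it, and the report lists them as evidence. Screenshots, which our report also attaches, are left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    keyword: str  # "Given", "When" or "Then"
    description: str
    checks: list[tuple[str, bool]] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        # A step passes only if every check recorded during it passed.
        return all(ok for _, ok in self.checks)


@dataclass
class Scenario:
    title: str
    steps: list[Step] = field(default_factory=list)

    def _add_step(self, keyword: str, description: str) -> None:
        self.steps.append(Step(keyword, description))

    def given(self, description: str) -> None:
        self._add_step("Given", description)

    def when(self, description: str) -> None:
        self._add_step("When", description)

    def then(self, description: str) -> None:
        self._add_step("Then", description)

    def check(self, label: str, condition: bool) -> None:
        # Attach the outcome of a check to the most recent step as evidence.
        # Must be called after at least one given/when/then step.
        self.steps[-1].checks.append((label, condition))

    @property
    def passed(self) -> bool:
        return all(step.passed for step in self.steps)

    def report(self) -> str:
        lines = [f"Scenario: {self.title} [{'PASSED' if self.passed else 'FAILED'}]"]
        for step in self.steps:
            lines.append(f"  {step.keyword} {step.description}")
            for label, ok in step.checks:
                lines.append(f"    - {'ok' if ok else 'NOT OK'}: {label}")
        return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical scenario. In a real click-through test the checks would read
    # values from the UI; here a dictionary stands in for the screen.
    screen = {"status": "Waiting for approval"}
    scenario = Scenario("Manager approves a submission")
    scenario.given("a submission of 250 EUR is waiting for approval")
    scenario.check("status is 'Waiting for approval'", screen["status"] == "Waiting for approval")
    scenario.when("the manager clicks 'Approve'")
    screen["status"] = "Approved"  # stands in for the actual UI interaction
    scenario.then("the submission is shown as approved")
    scenario.check("status is 'Approved'", screen["status"] == "Approved")
    print(scenario.report())
```

Recording checks per step, rather than one pass/fail verdict per scenario, is what lets a business user see exactly which behaviour was verified at which point in the flow.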

✅ Positive effects of introducing automated testing

We introduced this new way of working together about 6 months ago, for multiple waves of development on a new application. Throughout this project, we have noticed a lot of positive effects:

- Less time wasted on manual testing.

- Increased confidence. We already wrote a lot of tests before, but this new approach drives us towards the right scenarios and checks: the ones that matter most to our end users. Since there is no longer a mismatch between what the business expects to be tested and what is actually tested, both the business users and the developers have a lot more confidence to go live.

- Concrete evidence. Whenever we need to make a change (functional or technical, e.g. upgrades), the first questions are always: “What is the impact? Could this break something?” Now we can simply refer to the test report, which covers all important business flows.

- Simpler planning. Because we depend less on the business users’ time, we can take up some changes sooner and shift timelines more flexibly.

- Exposed testing blind spots. Since the developers are now more accountable, they feel a healthy pressure to ensure there are no remaining testing blind spots. As a result, those blind spots are now covered by additional automated tests and health checks. In exceptional cases, a small manual test targeted at the specific gap may remain, instead of an entire time-consuming business process.

- Improved story quality. The focus of the stories has shifted towards functioning business scenarios. For example, instead of a story for a submission screen already including fields to select ‘approvers’, these fields are now only added in the story that implements approvals. That way, it is harder to fall into the trap of implementing ‘features’ that actually do not add functionality.
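For integrations that are hard to cover with automated tests, a health check can at least verify continuously that the dependency is reachable. Below is a minimal, hypothetical Python sketch of such a probe; the check names and the UP/DOWN convention are illustrative assumptions, similar in spirit to what frameworks such as Spring Boot Actuator report on their health endpoint.

```python
from __future__ import annotations

from typing import Callable

# A health check is any zero-argument function that returns True when healthy.
HealthCheck = Callable[[], bool]


def run_health_checks(checks: dict[str, HealthCheck]) -> dict[str, str]:
    """Run each named check and report it as 'UP' or 'DOWN'.

    A check that raises (e.g. a timeout while calling an external service)
    is reported as 'DOWN' instead of crashing the whole probe.
    """
    statuses: dict[str, str] = {}
    for name, check in checks.items():
        try:
            statuses[name] = "UP" if check() else "DOWN"
        except Exception:
            statuses[name] = "DOWN"
    return statuses


def overall_status(statuses: dict[str, str]) -> str:
    """The application only counts as healthy if every individual check is UP."""
    return "UP" if all(status == "UP" for status in statuses.values()) else "DOWN"


if __name__ == "__main__":
    def mail_server_reachable() -> bool:
        # Hypothetical check that simulates an unreachable external service.
        raise ConnectionError("mail server unreachable")

    statuses = run_health_checks({"database": lambda: True, "mail-server": mail_server_reachable})
    print(statuses, overall_status(statuses))
```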

⚠️ Pitfalls

Even though we are incredibly positive about this new way of working, there are some remaining pitfalls to look out for.

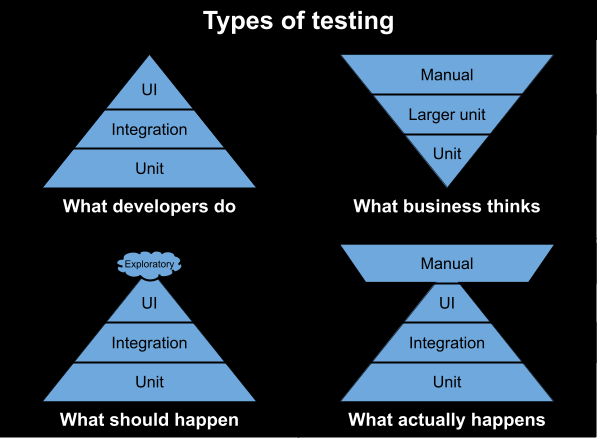

- Too many end-to-end tests. The testing pyramid promotes writing most tests at the unit level because unit tests are faster, easier to debug and less prone to random failures unrelated to the application code. We tried to limit the end-to-end scenarios by taking a risk-based approach: if a broken feature does not pose any risk, tests at the lower levels (e.g. unit tests) are sufficient. For example, if a cancel button doesn’t work, the user can just close their browser window.

- Natural urge to regress to manual testing. After all, for small changes, manual testing and manual evidence collection don’t take that much time. However, such tests likely won’t be repeated for future changes. It’s therefore important to treat them as exploratory testing rather than as a safety net, so that accountability stays with the team and the agreed-upon test scenarios.

➡️ Next steps

We feel this new way of working is a huge improvement, but it is just the beginning. We see the following next steps:

- Reduce focus on the end-to-end level. We would like to provide insight into selected tests at lower levels, keeping the focus on the cheapest tests that provide sufficient confidence.

- Create a habit of discussing test scenarios and checks together with the business, so that it becomes the standard way of working.

- Pursue the shift left. Agreed-upon test scenarios should become the one and only condition for going live, replacing time-consuming go-live approvals from different stakeholders. This should reduce our time-to-production for hotfixes and features drastically, eventually putting our customer on a path to continuous delivery.

- Treat software as a living product. Instead of only planning and approving changes in chunks of big “projects”, it should become a habit to continuously evolve and adapt applications to changing business needs.

What do you think? What do you (not) like about our approach? Let us know!